Internal of Hadoop Mapper Input

Well I just got a requirement to somehow change the input split size to the Mapper , and just by changing the configuration didn't help me lot, so I moved further and just tried to understand exactly whats inside -

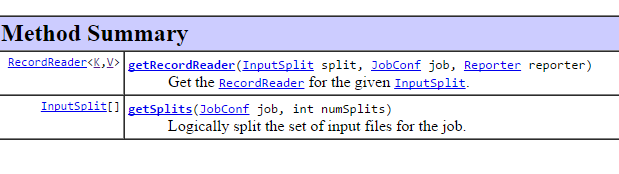

So above the Job flow in Mapper and the 5 methods are seriously something to do with split size.

Following is the way , an input is being processed inside Mapper -

- Input File is split by InputFormat class

- Key value pairs from the inputSplit is being generated for each Split using RecordReader

- All the generated Key Value pairs from the same split will be sent to the same Mapper, so a common unique mapper to handle all key value pairs from a specific Split

- All the result from each mapper is collected further in Partitioner

- The map method os called for each key-value pair once and output sent to the partitioner

- so now the above result in partioner is actually further taken into account by Reducer.

So Now I found the class InputFormat to just introduce my change and that is based on my requirement.

But further checking the exact class helped me more -

@Deprecated

public interface InputSplit extends Writable {

/**

* Get the total number of bytes in the data of the <code>InputSplit</code>.

*

* @return the number of bytes in the input split.

* @throws IOException

*/

long getLength() throws IOException;

/**

* Get the list of hostnames where the input split is located.

*

* @return list of hostnames where data of the <code>InputSplit</code> is

* located as an array of <code>String</code>s.

* @throws IOException

*/

String[] getLocations() throws IOException;

}

Further there few more things to check like TextInputFormat , SequenceFileInputFormat and others

Hold On.. We've RecordReader inbetween which splits the input in Key-value and what if I got something to do with it-

RecordReader.java Interface

We can find implementation of RecordReader in LineRecordReader or SequenceFileRecordReader.

Over there we can find that input split size crosses boundary sometimes, and such situation is being handled , so custom RecordReader must need to address the situation.

1 comment:

Hi, This is Yasmin from Chennai. Thanks for sharing such an informative post. Keep posting. I did Big Data Training in Chennai at TIS academy. Its really useful for me to know more knowledge about Big Data. They also give 100% placement guidance for all students.

Post a Comment